Stop using Redis across a network!

Redis is a powerful tool when used correctly; however, a growing trend is to use it in the worst way possible.

When building software, you constantly have to decide which tradeoffs best fit your needs. A typical service accepts a request via an API, checks a database, and adds a cache to speed up repeat queries or to hold session data. Redis is a great option for caching and other frequently accessed data because it runs in memory and is very fast: a call can complete in about 0.3ms (allowing for some processing overhead). Now imagine you decide to use Redis over the network (i.e., "hosted" or "as a service"): you have just added network latency to every one of those calls.
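Here's a rough sketch of that cache-aside read path, just to show where the round trips come from. `DictCache`, `cached_lookup`, and `load_from_db` are hypothetical names I'm using for illustration; redis-py's `Redis` client also exposes `get`/`set` (and returns `str` instead of `bytes` if created with `decode_responses=True`), so it could stand in for `DictCache`. The point: when the cache lives on another machine, every `get` and `set` below is a network round trip.

```python
from typing import Callable, Optional


class DictCache:
    """Hypothetical in-process stand-in for a cache exposing get/set, like redis.Redis."""

    def __init__(self) -> None:
        self._data: dict[str, str] = {}

    def get(self, key: str) -> Optional[str]:
        return self._data.get(key)

    def set(self, key: str, value: str) -> None:
        self._data[key] = value


def cached_lookup(key: str, cache, load_from_db: Callable[[str], str]) -> str:
    """Cache-aside read: check the cache, fall back to the database on a miss,
    then store the result so repeat queries skip the database.
    Against a remote cache, each get/set here costs one network round trip."""
    value = cache.get(key)
    if value is None:
        value = load_from_db(key)
        cache.set(key, value)
    return value


if __name__ == "__main__":
    db_calls = []

    def load_user(key: str) -> str:
        db_calls.append(key)  # record each (slow) database hit
        return f"row for {key}"

    cache = DictCache()
    print(cached_lookup("user:1", cache, load_user))
    print(cached_lookup("user:1", cache, load_user))
    print(len(db_calls))  # only the first lookup reached the database
```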

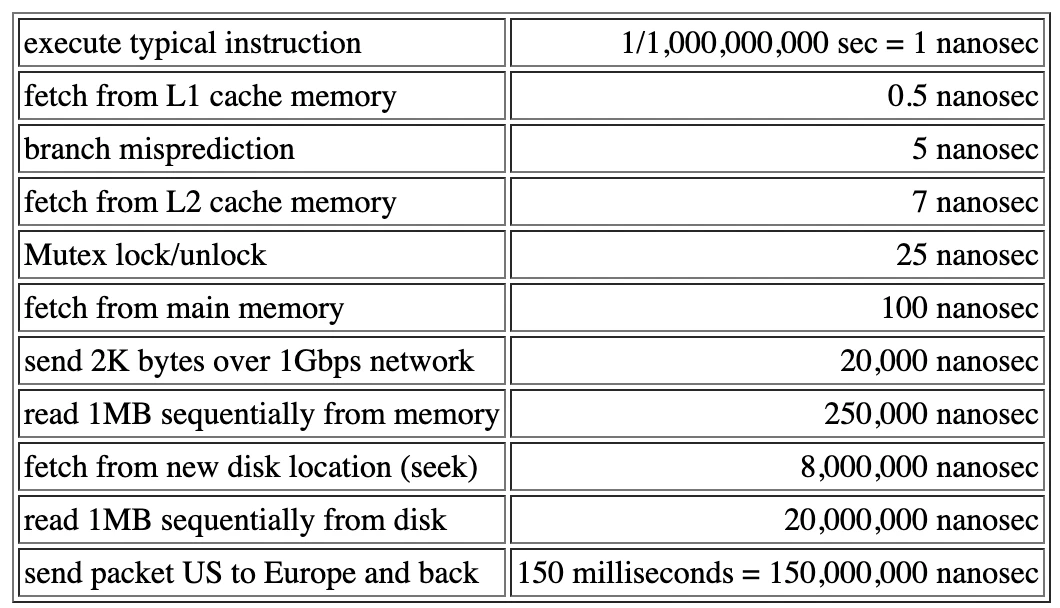

Peter Norvig provided a great footnote on the speed of various operations on a PC.

Network latency is a big variable, because it depends on where the hosted service is relative to your API server. In the best case, it is in the same data center, so you add only about 0.5ms of latency. That takes the per-call time from 0.3ms to 0.8ms, roughly a 2.7x increase. In the worst case, it is in a different data center and you spend 20+ms per round trip, a 60x+ increase.
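A back-of-the-envelope version of that per-call arithmetic, as a sketch. It assumes a same-host call costs about 0.3ms and that the network round trip simply adds on top of it; the round-trip values are the illustrative figures from the paragraph above, not measurements.

```python
# Simple additive model: one Redis call = local processing time + one network round trip.
LOCAL_CALL_MS = 0.3  # assumed per-call time when Redis runs on the same host


def per_call_ms(network_rtt_ms: float, local_ms: float = LOCAL_CALL_MS) -> float:
    """Estimated time for one call once a network round trip is added."""
    return local_ms + network_rtt_ms


def slowdown(network_rtt_ms: float, local_ms: float = LOCAL_CALL_MS) -> float:
    """How many times slower a remote call is than a local one."""
    return per_call_ms(network_rtt_ms, local_ms) / local_ms


if __name__ == "__main__":
    scenarios = [("same host", 0.0), ("same data center", 0.5), ("other region", 20.0)]
    for label, rtt in scenarios:
        print(f"{label:>16}: {per_call_ms(rtt):6.2f} ms per call, {slowdown(rtt):5.1f}x")
```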

If you only need to make a single call, it's not a big deal, although you would be better off using your local disk than a Redis instance hosted on another machine. But if your processing needs a dozen, a hundred, or a thousand calls, you pay the network latency on every one of them, and the total cost quickly dwarfs that of a local instance on the API server.
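To see how that multiplies, here's a small sketch that totals the extra time for N calls. It assumes the calls are made one at a time, each waiting for the previous reply, so every call pays the full round trip; the 0.3ms local cost and the 0.5ms / 20ms round trips are the same illustrative numbers as above.

```python
# Assumed model: calls run sequentially, each waiting for the previous reply,
# so the network round trip is paid once per call.
LOCAL_CALL_MS = 0.3  # assumed per-call time for a same-host Redis


def total_latency_ms(num_calls: int, network_rtt_ms: float,
                     local_ms: float = LOCAL_CALL_MS) -> float:
    """Total time in ms for num_calls sequential calls with the given round trip."""
    return num_calls * (local_ms + network_rtt_ms)


def added_latency_ms(num_calls: int, network_rtt_ms: float) -> float:
    """Extra time compared with running the same calls against a local instance."""
    return total_latency_ms(num_calls, network_rtt_ms) - total_latency_ms(num_calls, 0.0)


if __name__ == "__main__":
    for calls in (1, 12, 100, 1000):
        print(f"{calls:>5} calls: +{added_latency_ms(calls, 0.5):8.1f} ms same DC, "
              f"+{added_latency_ms(calls, 20.0):9.1f} ms other region")
```

With a 20ms round trip, a request that makes 1,000 calls picks up about 20 extra seconds, while the local version of the same work takes about 0.3 seconds.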

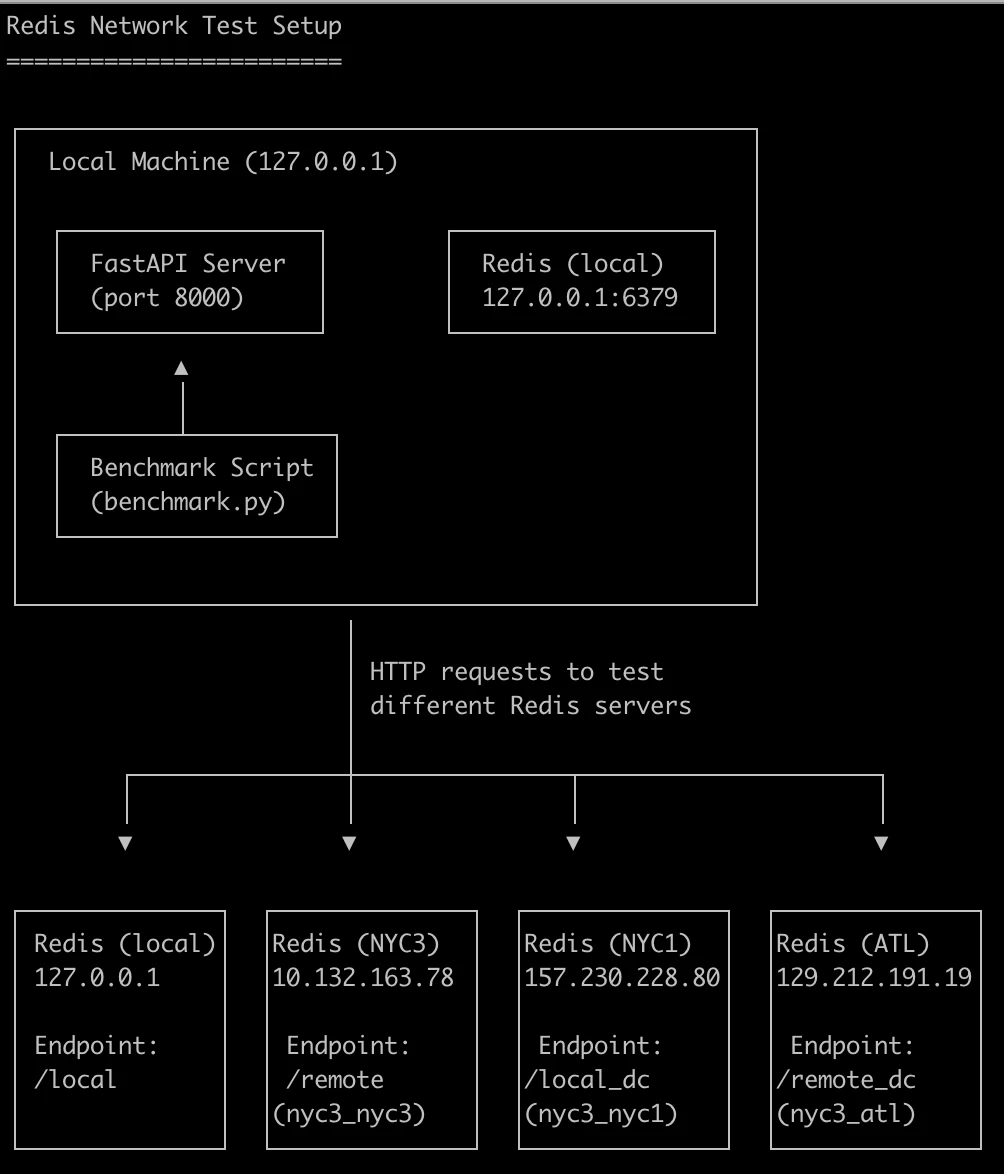

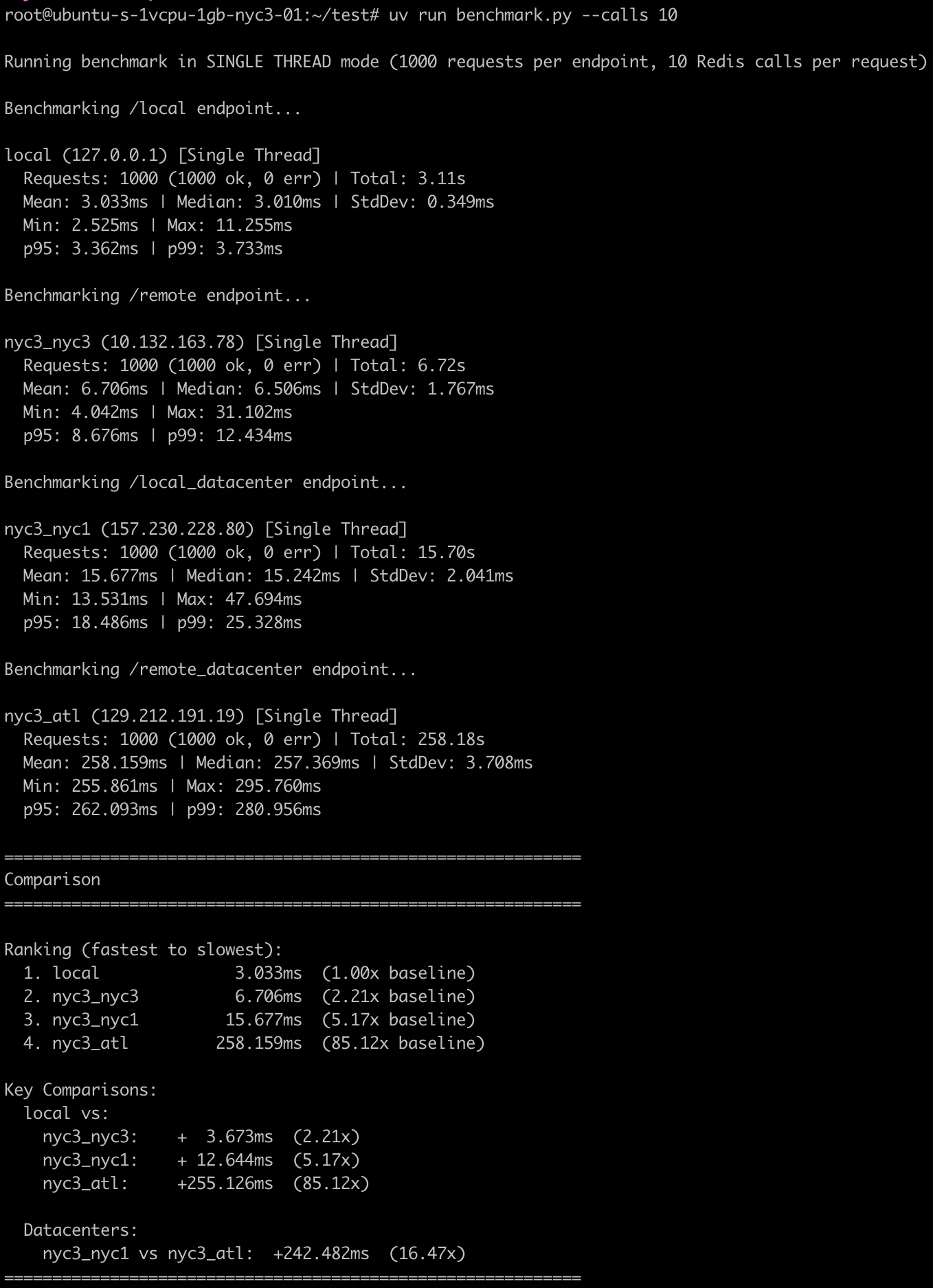

For the sake of demonstration, I set up 4 servers on DigitalOcean: 3 in the New York area and 1 in another region. Each is a $6/month node with 1 GB RAM and 1 CPU: 2 in nyc3, 1 in nyc1, and 1 in the Atlanta region. I installed Redis on all of them, and on the API server (the "local host") I installed Python 3.13.9 with uv, running FastAPI.

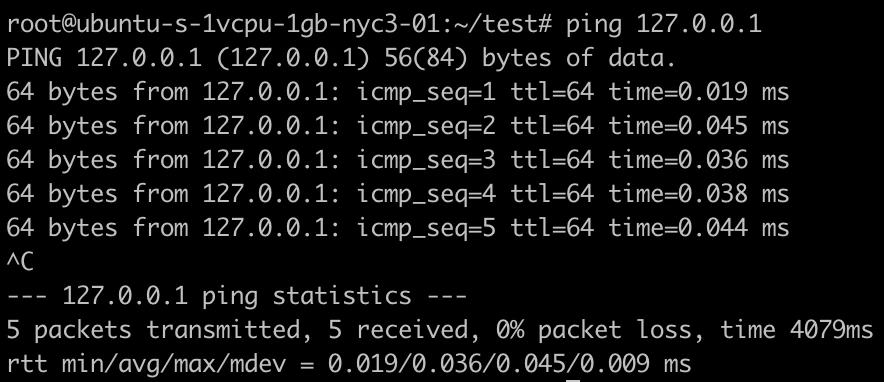

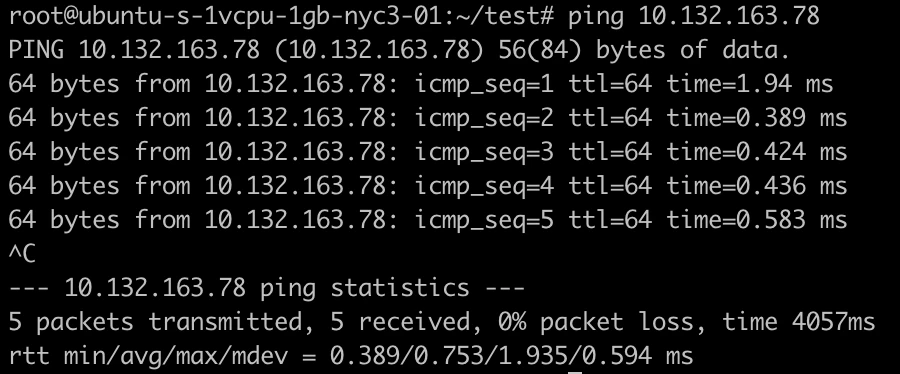

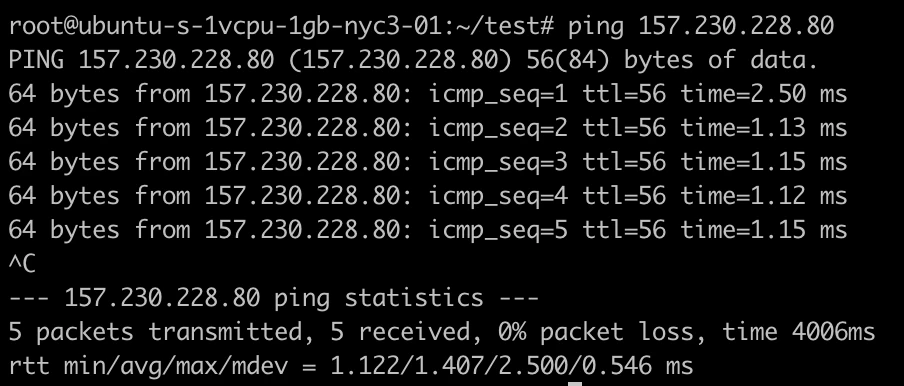

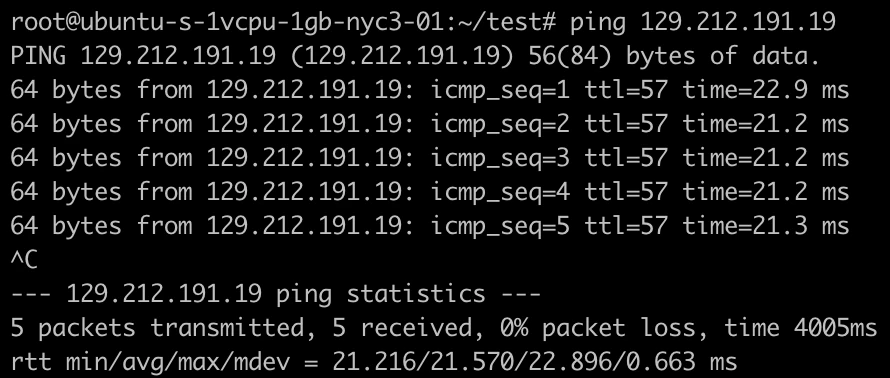

The first test is a simple ping, to get an idea of our network latency.
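Ping measures the network itself. If you want the same min/avg/max view at the application level, a small timing helper like this sketch works for any call. `measure_rtt_ms` is a hypothetical helper, not something from the original setup; with redis-py installed you could pass `redis.Redis(host=..., port=6379).ping` as the callable.

```python
import statistics
import time
from typing import Callable


def measure_rtt_ms(call: Callable[[], object], samples: int = 10) -> dict[str, float]:
    """Time `call` repeatedly and summarize round-trip times in milliseconds,
    in the same min/avg/max spirit as ping's summary line."""
    if samples < 1:
        raise ValueError("samples must be at least 1")
    timings = []
    for _ in range(samples):
        start = time.perf_counter()
        call()
        timings.append((time.perf_counter() - start) * 1000.0)
    return {
        "min": min(timings),
        "avg": statistics.fmean(timings),
        "max": max(timings),
    }


if __name__ == "__main__":
    # Stand-in for a network call: sleep for ~1ms.
    stats = measure_rtt_ms(lambda: time.sleep(0.001), samples=5)
    print({name: round(value, 3) for name, value in stats.items()})
```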

Local loopback tends to be fairly quick!

For nyc3 <-> nyc3, since I created both machines at the same time, they're probably on the same physical host. We're seeing ~0.4ms.

This is from nyc3 to nyc1: slightly slower, but the machines are likely still physically very close.

This is from nyc3 to Atlanta, which gets us up to 21ms on average.

This shouldn't be a big surprise: doing 10 calls 1,000 times in a row (10k calls total) takes just 3.1 seconds when everything is on the same machine, about 0.31ms per call. Introducing the ~0.5ms network latency of a nearby remote Redis more than doubles that to 6.72 seconds. Going from nyc3 to nyc1 pushes it up to 15.7 seconds, about 5x. And of course the most atrocious is nyc3 -> Atlanta at 258 seconds, over 80x slower than local.
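The post doesn't show the benchmark code, so here is only a sketch of what a "10 calls, 1,000 times" loop could look like. The choice of `GET`, the key names, and `FakeRemoteClient` (which sleeps to simulate a round trip) are all assumptions for illustration. Any object with a `get(key)` method works, including a real `redis.Redis(host=..., port=6379)` client.

```python
import time


def run_benchmark(client, iterations: int = 1000, calls_per_iteration: int = 10) -> float:
    """Run `iterations` rounds of `calls_per_iteration` sequential GETs and
    return total wall-clock seconds. Key names are hypothetical."""
    keys = [f"bench:key:{i}" for i in range(calls_per_iteration)]
    start = time.perf_counter()
    for _ in range(iterations):
        for key in keys:
            client.get(key)  # each call waits for its reply before the next one
    return time.perf_counter() - start


class FakeRemoteClient:
    """Hypothetical in-memory client that sleeps to simulate a network round trip."""

    def __init__(self, rtt_ms: float = 0.0) -> None:
        self.rtt_s = rtt_ms / 1000.0
        self.store: dict[str, str] = {}
        self.calls = 0

    def get(self, key: str):
        self.calls += 1
        if self.rtt_s:
            time.sleep(self.rtt_s)  # simulated network delay
        return self.store.get(key)


if __name__ == "__main__":
    fake = FakeRemoteClient(rtt_ms=0.5)
    elapsed = run_benchmark(fake, iterations=100)
    print(f"{fake.calls} calls in {elapsed:.2f}s "
          f"({elapsed / fake.calls * 1000:.3f} ms/call)")
```

Dividing the reported totals by 10,000 calls gives the per-call picture: about 0.31ms local, 0.67ms nyc3 <-> nyc3, 1.57ms nyc3 -> nyc1, and 25.8ms nyc3 -> Atlanta.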

TL;DR: always run Redis on the same physical host as your API server, or you're introducing a lot of latency to your services.